About

The Extreme-scale Discontinuous Galerkin Environment (EDGE) is a solver for hyperbolic partial differential equations with an emphasis on seismic simulations. EDGE targets model setups with high geometric complexity and aims to increase the throughput of extreme-scale ensemble simulations. The entire software stack is tailored to the execution of “fused” simulations, which allow multiple model setups to be studied within one execution of the forward solver.

EDGE’s core is open-source under the permissive BSD 3-Clause license. Additionally, EDGE’s user and developer guides are CC0’d. Many of EDGE’s assets, e.g., example setups or benchmark scripts, are CC0’d or licensed under BSD 3-Clause as well.

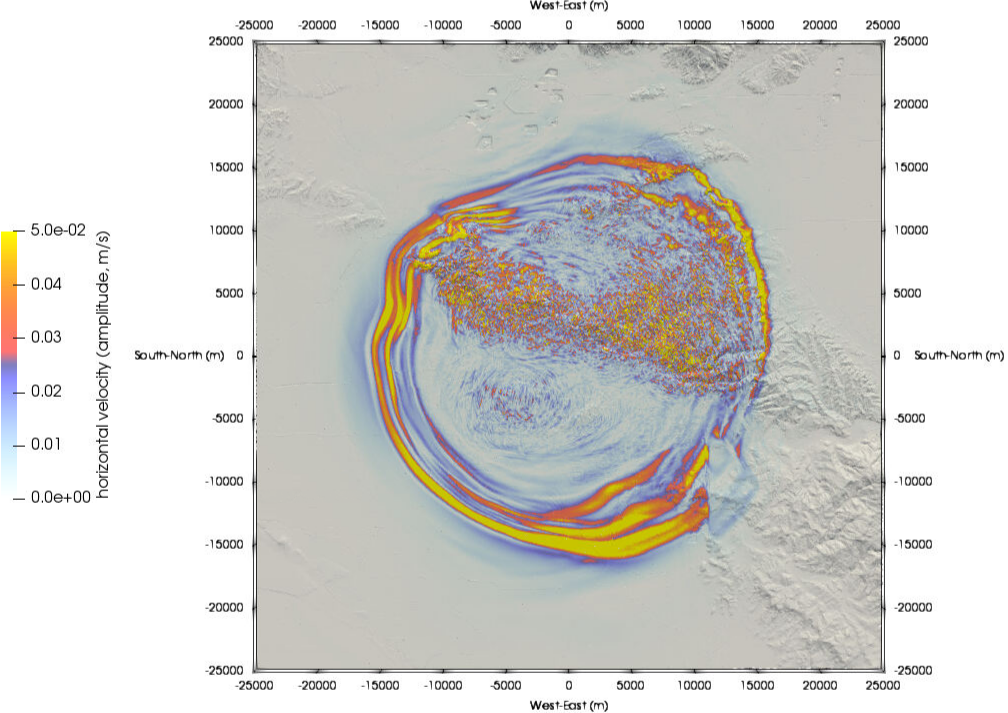

Illustration of the seismic wave field for a simulation of the Mw 5.1 La Habra Earthquake. The simulation was conducted on the supercomputer Frontera and is part of the Southern California Earthquake Center’s verification and validation project High-F.

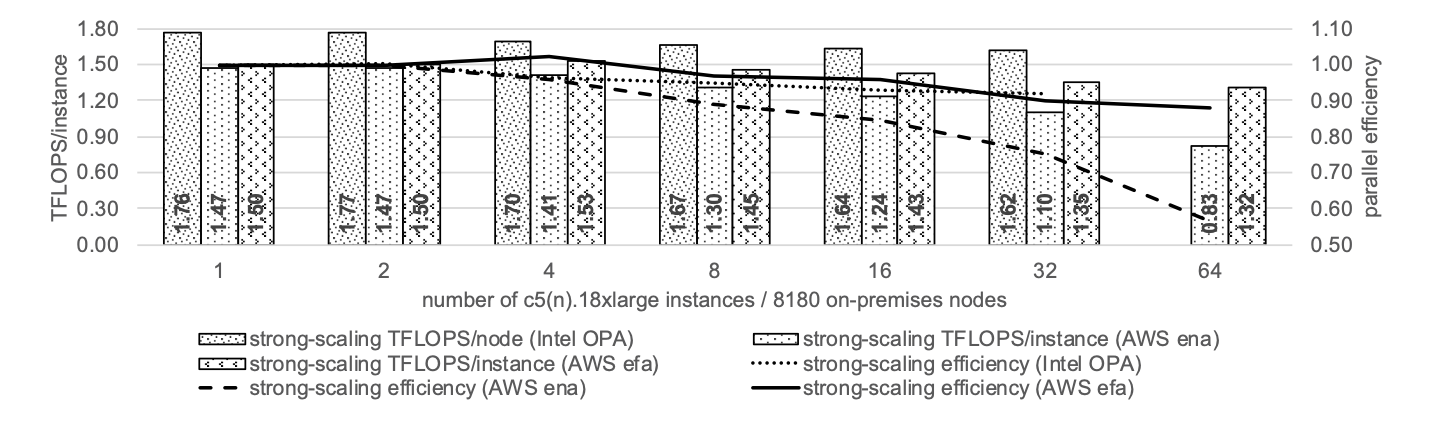

Strong scalability of the solver EDGE in AWS EC2 on c5.18xlarge and c5n.18xlarge instances. The results are compared to an on-premises cluster with Intel OPA.

Contact

Alexander Breuer

Scalable Data- and Compute-intensive Analyses lab

Friedrich Schiller University Jena

Faculty of Mathematics and Computer Science

Institute of Computer Science

Fürstengraben 1

07743 Jena

Germany

Acknowledgements

EDGE’s prototyping efforts have been supported by an Intel Parallel Computing Center (Intel PCC) at the San Diego Supercomputer Center (SDSC). Support for spontaneous rupture simulations was initiated through the Southern California Earthquake Center (SCEC) contribution #16247: “Increasing the Efficiency of Dynamic Rupture Simulations by Concurrently Executed Forward Runs”. The development of nonlinear earthquake simulations was supported by the SCEC contribution #18211: “Nonlinear Earthquake Simulations Through Robust and Accurate A Posteriori Sub-Cell Limiting”.

High-performance computing resources are required for development, testing, and accurate simulations. EDGE ran in the Oracle Cloud Infrastructure, Amazon Elastic Compute Cloud, and Google Cloud Platform. EDGE ran on Frontera, Cori Phase 2, Theta, Stampede 2, Blue Waters, Comet, and Endeavour. This research is part of the Frontera computing project at the Texas Advanced Computing Center (TACC). Frontera is made possible by National Science Foundation award OAC-1818253. This research was supported by the AWS Cloud Credits for Research program. This research used resources of the Google Cloud. This research used resources of the National Energy Research Scientific Computing Center (NERSC), a DOE Office of Science User Facility supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC02-05CH11231. This research used resources of the Argonne Leadership Computing Facility (ALCF), which is a DOE Office of Science User Facility supported under Contract DE-AC02-06CH11357. This work used the Extreme Science and Engineering Discovery Environment (XSEDE), which is supported by National Science Foundation grant number ACI-1053575. This research is part of the Blue Waters sustained-petascale computing project, which is supported by the National Science Foundation (awards OCI-0725070 and ACI-1238993) and the state of Illinois. Blue Waters is a joint effort of the University of Illinois at Urbana-Champaign and its National Center for Supercomputing Applications.

EDGE relies heavily on the contributions of many authors to open-source software. This software includes, but is not limited to: ASan (debugging), Catch (unit tests), Clang (compilation), Cppcheck (static code analysis), Easylogging++ (logging), ExprTk (expression parsing), GCC (compilation), Git (versioning), Git LFS (versioning), Sphinx (documentation), Gmsh (meshing, mesh interface), GMT (DEM pre-processing), HDF5 (I/O), Jekyll (homepage), LIBXSMM (matrix kernels), METIS (partitioning), ParaView (visualization), PROJ (map projections), pugixml (XML interface), SCons (build tool), UCVMC (velocity models), Valgrind (memory debugging), VisIt (visualization).